Let's Try - Amazon Data Firehose Delivery to Apache Iceberg

Introduction

Recently I stumbled upon a new feature in Amazon Data Firehose that has the potential to make working with Apache Iceberg tables in AWS much easier. The Amazon Data Firehose team has added preview support for delivering data to Apache Iceberg tables.

I was keen to give it a go, as right now delivering data to Iceberg usually requires Lambda functions and event triggers that fire when objects land in S3. This feature potentially removes the need to write any code at all!

In this very short guide, I'll walk you through the process of setting up an Iceberg table in Amazon Athena, creating an Amazon Data Firehose delivery stream, and delivering data to the Iceberg table.

Pre-requisites

To follow along with this post, you must do some basic setup in Athena, S3 and Glue. If you have used Athena before, there's a good chance you already have this set up (possibly with a different bucket). Feel free to skip this section if you are confident you have the required setup.

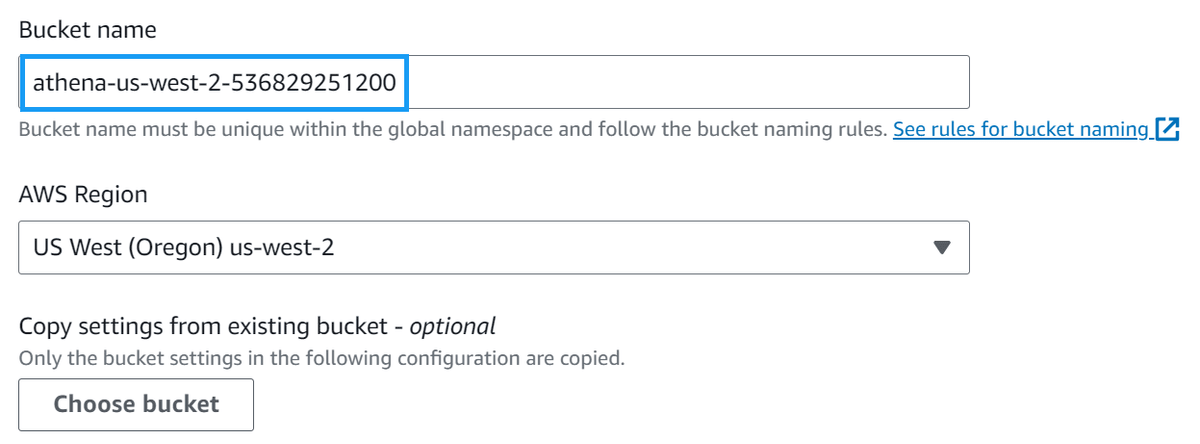

S3

Start by navigating to the AWS S3 console and creating a bucket for Athena query results.

Give the bucket a name such as athena-<region>-<account-id> and click Create bucket. (For example, athena-us-west-2-123456789012.)
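If you would rather script this step than click through the console, a minimal sketch with boto3 might look like the following. The function names are my own for illustration, and the `create_bucket` call assumes a region other than us-east-1 (which does not accept a `LocationConstraint`).

```python
def athena_bucket_name(region: str, account_id: str) -> str:
    """Build the suggested bucket name: athena-<region>-<account-id>."""
    return f"athena-{region}-{account_id}"


def create_athena_bucket(region: str) -> str:
    """Look up the current account ID and create the bucket (needs AWS credentials)."""
    import boto3  # imported lazily so the naming helper works without boto3

    account_id = boto3.client("sts").get_caller_identity()["Account"]
    name = athena_bucket_name(region, account_id)
    # Assumption: region is not us-east-1, so a LocationConstraint is required.
    boto3.client("s3", region_name=region).create_bucket(
        Bucket=name,
        CreateBucketConfiguration={"LocationConstraint": region},
    )
    return name


print(athena_bucket_name("us-west-2", "123456789012"))
# athena-us-west-2-123456789012
```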

Note: for this post, I'll be using the us-west-2 region, as it is one of the regions where this preview feature worked for me.

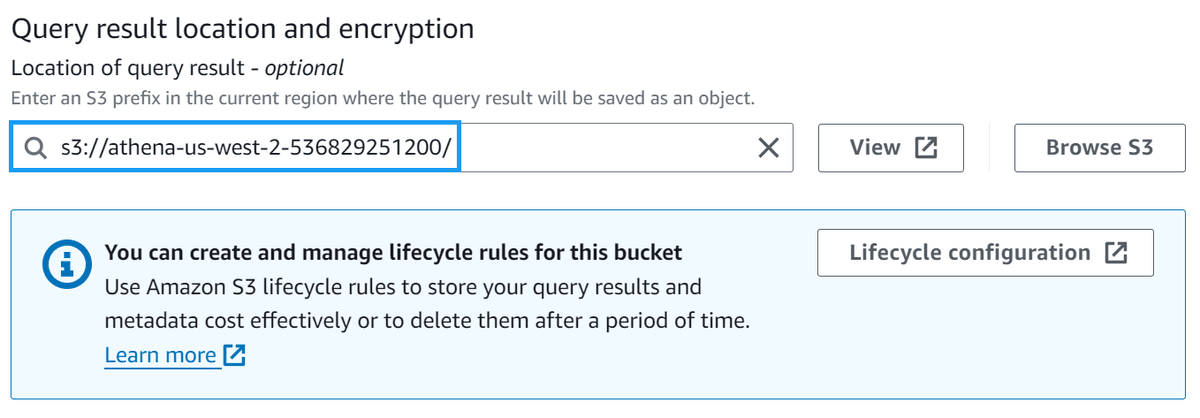

Athena

Navigate to the AWS Athena settings console and click Manage under the Query result and encryption settings section.

Change the Location of query results to the bucket you created in the previous step, and click Save.
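The same setting can be applied programmatically. This is a sketch that assumes you want to change the `primary` workgroup; the helper names are hypothetical, while `update_work_group` is the boto3 Athena call that carries the new output location.

```python
def result_location_update(bucket: str, workgroup: str = "primary") -> dict:
    """Build the arguments for athena.update_work_group()."""
    return {
        "WorkGroup": workgroup,
        "ConfigurationUpdates": {
            "ResultConfigurationUpdates": {"OutputLocation": f"s3://{bucket}/"}
        },
    }


def apply_result_location(bucket: str, workgroup: str = "primary") -> None:
    """Point the workgroup's query results at the bucket (needs AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    boto3.client("athena").update_work_group(
        **result_location_update(bucket, workgroup)
    )
```

Calling `apply_result_location("athena-us-west-2-123456789012")` would then have the same effect as saving the location in the console, assuming the primary workgroup is the one you query with.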

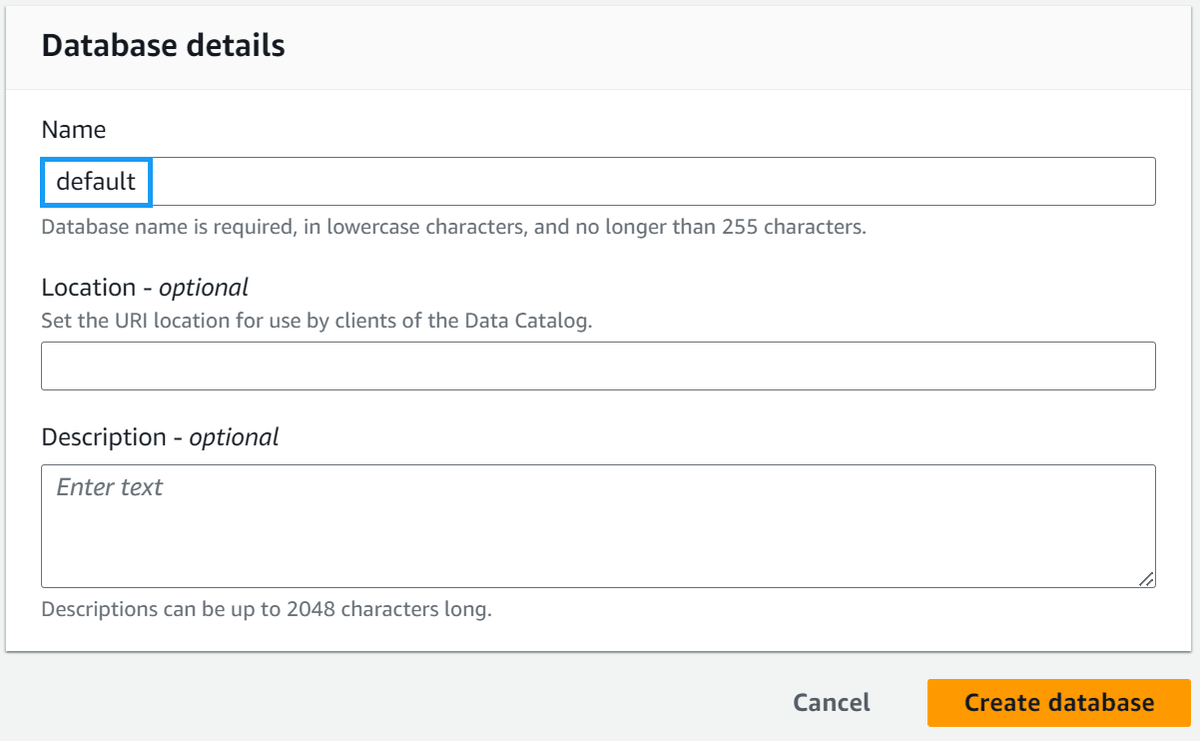

Glue

Navigate to the AWS Glue databases console and check to see if you have a database called default. If you do not, create one.
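The check-then-create logic above can be sketched with boto3 as follows. `ensure_database` is a hypothetical helper name; it takes a Glue client so that it is easy to exercise without touching a real account.

```python
def ensure_database(glue, name: str = "default") -> bool:
    """Create the Glue database if it is missing.

    Returns True when the database had to be created, False if it already existed.
    """
    try:
        glue.get_database(Name=name)
        return False
    except glue.exceptions.EntityNotFoundException:
        glue.create_database(DatabaseInput={"Name": name})
        return True


# Usage (needs AWS credentials):
#   import boto3
#   ensure_database(boto3.client("glue"))
```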

Helper Script

To help demonstrate the Firehose delivery feature, I've created a script that handles much of the tedious work of creating an Iceberg table for you. You can find the script here.

At a high level, the script does the following:

- Creates an S3 bucket that Iceberg will use to store data and metadata

- Creates SQL files for:

  - Creating an Iceberg table

  - Deleting the tables when we're done

- Creates an IAM role for Firehose that is used to deliver data to the Iceberg table

- Creates an Amazon Data Firehose delivery stream that delivers data to the Iceberg table

- Generates 1000 records and sends them to the Firehose delivery stream
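To give a feel for the IAM part of that list, here is a rough sketch of the two policy documents a Firehose role for Iceberg delivery typically needs. This is not copied from the helper script: the function names are hypothetical and the exact action list is my assumption of a reasonable minimum (Glue table access plus read/write on the data bucket).

```python
import json


def firehose_trust_policy() -> dict:
    """Trust policy that lets the Firehose service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "firehose.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def firehose_permissions(bucket, region, account_id, database, table) -> dict:
    """Permissions policy: Glue catalog access plus S3 access to the data bucket."""
    glue = f"arn:aws:glue:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Assumed minimum set of Glue actions for Iceberg delivery.
                "Action": ["glue:GetTable", "glue:GetDatabase", "glue:UpdateTable"],
                "Resource": [
                    f"{glue}:catalog",
                    f"{glue}:database/{database}",
                    f"{glue}:table/{database}/{table}",
                ],
            },
            {
                "Effect": "Allow",
                "Action": [
                    "s3:AbortMultipartUpload",
                    "s3:GetBucketLocation",
                    "s3:GetObject",
                    "s3:ListBucket",
                    "s3:ListBucketMultipartUploads",
                    "s3:PutObject",
                    "s3:DeleteObject",
                ],
                "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


print(json.dumps(firehose_trust_policy(), indent=2))
```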

Let's grab the script and run it so you can follow along.

Note: You must set up AWS credentials on your machine for this to work. If you don't have them set up, you can follow the AWS CLI configuration guide.

# Download the script, make it executable

curl https://gist.githubusercontent.com/t04glovern/04f6f2934353eb1d0fffd487e9b9b6a3/raw > lets-try-iceberg.py && chmod +x lets-try-iceberg.py

# Create a virtual env (optional)

python3 -m venv .venv

source .venv/bin/activate

# Install the dependencies

pip3 install boto3

# Run the script

./lets-try-iceberg.py --table lets_try_iceberg_firehose --firehose

Have a look at the output, and you should see something like the following. You may see an error about the role not being authorized to perform glue:GetTable; ignore this for now, as we will be creating the table manually.

Take note of the bucket name, as we will be using this later as well. In this case, the bucket name is iceberg-sample-data-970045.

# INFO:root:Bucket iceberg-sample-data-970045 does not exist, creating it...

# INFO:root:IAM role lets-try-iceberg-firehose-role already exists

# INFO:root:IAM policy lets-try-iceberg-firehose-policy already exists, updating it...

# INFO:root:Role arn:aws:iam::012345678901:role/lets-try-iceberg-firehose-role is now available.

# ERROR:root:Failed to create Firehose delivery stream: An error occurred (InvalidArgumentException) when calling the CreateDeliveryStream operation: Role arn:aws:iam::012345678901:role/lets-try-iceberg-firehose-role is not authorized to perform: glue:GetTable for the given table or the table does not exist.

If you check the directory you ran the script from, you should see several files created:

$ ls -l

# -rw-r--r-- 1 nathan staff 366 10 Jul 19:24 1-athena-iceberg-create-table.sql

# -rw-r--r-- 1 nathan staff 403 10 Jul 19:25 2-athena-create-temp-table.sql

# -rw-r--r-- 1 nathan staff 94 10 Jul 19:25 3-insert-into-iceberg-from-temp-table.sql

# -rw-r--r-- 1 nathan staff 62 10 Jul 19:25 4-cleanup-temp-table.sql

# -rw-r--r-- 1 nathan staff 50 10 Jul 19:25 5-cleanup-iceberg-table.sql

The only files we care about for this guide are 1-athena-iceberg-create-table.sql and 5-cleanup-iceberg-table.sql, because we will be loading data through Amazon Data Firehose rather than from a temporary table.

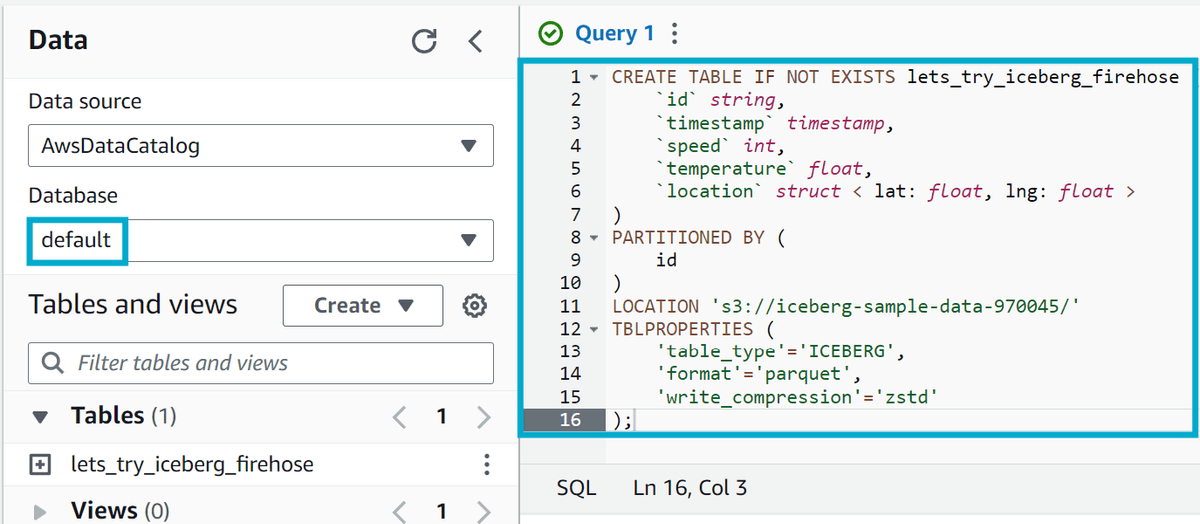

Create the Iceberg Table

Head over to the AWS Athena console and ensure that the default database is selected.

Take the contents of the 1-athena-iceberg-create-table.sql file and paste it into the query editor. Click Run to create the table.

You should see a new table called lets_try_iceberg_firehose under the Tables section of the default database.
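For readers who don't have the generated SQL file to hand, here is a sketch of the general shape of an Athena Iceberg DDL statement. The column list and the S3 location layout are hypothetical; the contents of 1-athena-iceberg-create-table.sql may differ. The part that matters is the `table_type` table property, which is what tells Athena to create an Iceberg table.

```python
def iceberg_create_table_sql(table: str, bucket: str, columns: list) -> str:
    """Render a CREATE TABLE statement for an Athena-managed Iceberg table."""
    cols = ",\n  ".join(f"{name} {sql_type}" for name, sql_type in columns)
    return (
        f"CREATE TABLE {table} (\n  {cols}\n)\n"
        f"LOCATION 's3://{bucket}/{table}/'\n"  # assumed layout: one prefix per table
        "TBLPROPERTIES ('table_type' = 'ICEBERG')"
    )


# Hypothetical schema, for illustration only.
print(
    iceberg_create_table_sql(
        "lets_try_iceberg_firehose",
        "iceberg-sample-data-970045",
        [("id", "string"), ("temperature", "double")],
    )
)
```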

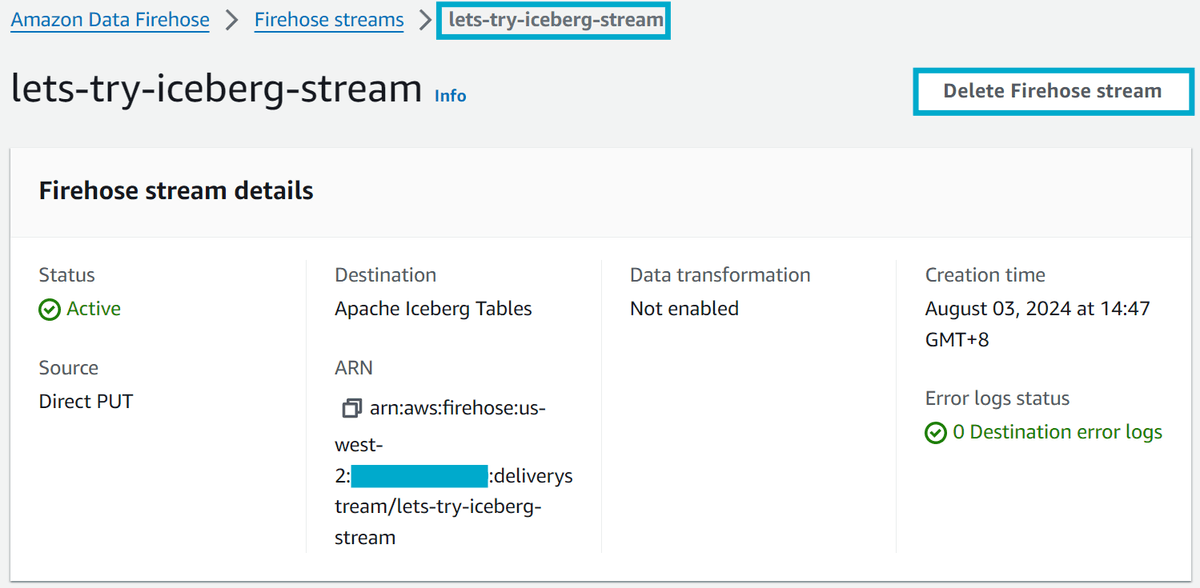

Create Amazon Data Firehose Delivery Stream

Now that we have our Iceberg table created, we can create the Firehose delivery stream. This can be done either via the AWS Firehose console or with the helper script.
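If you are curious what creating the stream looks like as an API call, the sketch below builds the arguments for boto3's `create_delivery_stream` with an Iceberg destination. The helper name is hypothetical, the field layout reflects my understanding of the `IcebergDestinationConfiguration` structure, and it assumes a boto3 release recent enough to include that structure. The helper script may configure more options than this.

```python
def iceberg_stream_config(
    stream_name, role_arn, bucket, region, account_id, database, table
) -> dict:
    """Build keyword arguments for firehose.create_delivery_stream()."""
    return {
        "DeliveryStreamName": stream_name,
        "DeliveryStreamType": "DirectPut",  # records are pushed with PutRecord
        "IcebergDestinationConfiguration": {
            "RoleARN": role_arn,
            # The Glue Data Catalog that holds the Iceberg table.
            "CatalogConfiguration": {
                "CatalogARN": f"arn:aws:glue:{region}:{account_id}:catalog"
            },
            "DestinationTableConfigurationList": [
                {
                    "DestinationDatabaseName": database,
                    "DestinationTableName": table,
                }
            ],
            # Bucket that Firehose writes data files to.
            "S3Configuration": {
                "RoleARN": role_arn,
                "BucketARN": f"arn:aws:s3:::{bucket}",
            },
        },
    }


# Usage (needs AWS credentials):
#   import boto3
#   boto3.client("firehose").create_delivery_stream(**iceberg_stream_config(...))
```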

Important note: Remember to replace the bucket value with the bucket name you noted earlier!

./lets-try-iceberg.py --table lets_try_iceberg_firehose --bucket iceberg-sample-data-<number> --firehose

# INFO:root:IAM role lets-try-iceberg-firehose-role already exists

# INFO:root:IAM policy lets-try-iceberg-firehose-policy already exists, updating it...

# INFO:root:Role arn:aws:iam::012345678901:role/lets-try-iceberg-firehose-role is now available.

# INFO:root:Created Firehose delivery stream: arn:aws:firehose:us-west-2:012345678901:deliverystream/lets-try-iceberg-stream

Deliver Data to the Iceberg Table with Firehose

Now that we have the Firehose delivery stream set up, we can deliver data to the Iceberg table by generating some sample data and putting it into the Firehose delivery stream.
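Conceptually, the load step boils down to serialising each record as JSON and calling `put_record`. The sketch below illustrates that idea; the record schema and function names are hypothetical and not taken from the helper script.

```python
import json
import random


def make_record(i: int) -> dict:
    """Generate one sample record (hypothetical schema)."""
    return {"id": str(i), "temperature": round(random.uniform(15.0, 30.0), 2)}


def encode_record(record: dict) -> bytes:
    """Serialise a record as newline-terminated JSON bytes."""
    return (json.dumps(record) + "\n").encode("utf-8")


def send_records(firehose, stream_name: str, count: int = 1000) -> list:
    """Put `count` records onto the stream and return their record IDs."""
    record_ids = []
    for i in range(count):
        response = firehose.put_record(
            DeliveryStreamName=stream_name,
            Record={"Data": encode_record(make_record(i))},
        )
        record_ids.append(response["RecordId"])
    return record_ids


# Usage (needs AWS credentials):
#   import boto3
#   send_records(boto3.client("firehose"), "lets-try-iceberg-stream")
```

For larger volumes, `put_record_batch` would cut down on the number of API calls; one record per call keeps the sketch easy to follow.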

./lets-try-iceberg.py --table lets_try_iceberg_firehose --bucket iceberg-sample-data-<number> --firehose-load

# INFO:root:Successfully put record to Firehose: n2qDPQp2HfiJd+SXhqjssjKz5+yQuen/58Yac8qMa4gQEhp/E/2L0MEbkBbRZzocQll6Yc4lGbyiDsZLudVli0b/xr0axGQD0MOcaIyZ9+nFy3QpYMvW9Zf0jsW8Fi3GLMRCG+k9OZMA25Bfc1HgUk7i7q4AAzjN8D8XPTivPFC5slrdRDLh+4VywgXW5YlXtRW2dnqKQzDoArjdUSeUIPJyYXq2vdUR

# INFO:root:Successfully put record to Firehose: xM5PqCJAhOlN9Vj1IVfDcM3fX0eCMR5pfcdqr0kytx2PqD9zNxW3y+IZMig6i61EfiwTffV80vAlASQFoMylb3GjMwaOZgMW3PmB3LOLDfRcHJh6lYTMaoT8tBRJiAp88OLy2fFs2YI+wu8/3M865jSCc+msHmnr3gVofGx1QuN8Fg19w2SBtldVhEN+jAqHrzU7DlL5yubdtIC2/9czq6rleaNkJyZV

# INFO:root:Successfully put record to Firehose: p/IiOnvpRgkOdINyptyyKvdmexKXcQsdF/IKZUnFurSc3VaeQOEYdBU7V+mekGKWTKlr9cC0gaO3Lc2Ef8+xzNQmDGJ/Pg3jXXBLQhcNyCxAjpKSI8lJfGy1tiobk3QC+jldvI0zZgx+9cKVQ2UpXy0IfVtn21iG77Pxx/jZCNtJpktSdx2yXbNcGnFiuisT5jWivGBJjSAshXqt7SfQ3kKQjU4OVlcg

View Iceberg Table Data

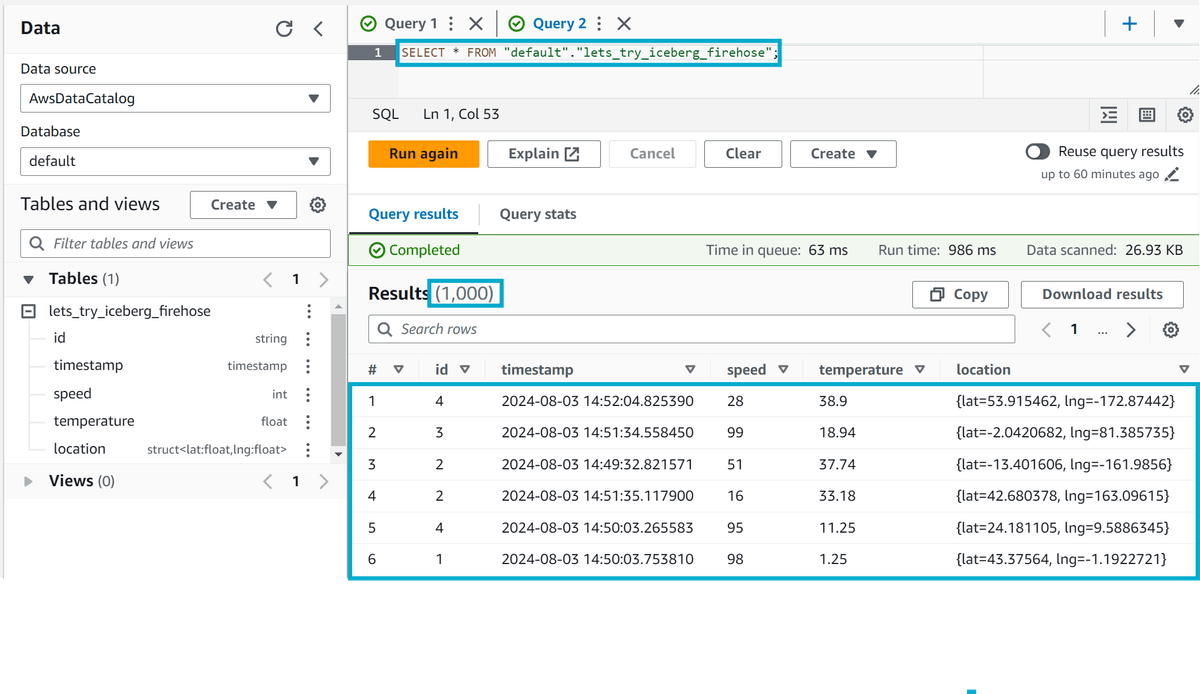

Head over to the AWS Athena console and ensure that the default database is selected.

You can now query the Iceberg table to see the data that was delivered by Firehose.

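SELECT * FROM lets_try_iceberg_firehose;

The results should contain the records that Firehose delivered. If the table is still empty, give Firehose a little time to flush its buffer and run the query again.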
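The same query can be run from Python. This is a minimal sketch using the boto3 Athena client: `run_query` is a hypothetical helper, it relies on the workgroup already having a query result location configured, and it only reads the first page of results.

```python
import time


def run_query(athena, sql: str, database: str = "default", poll_seconds: float = 1.0):
    """Run a query, wait for it to finish, and return rows as lists of strings."""
    query_id = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
    )["QueryExecutionId"]

    # Poll until Athena reports a terminal state.
    while True:
        execution = athena.get_query_execution(QueryExecutionId=query_id)
        status = execution["QueryExecution"]["Status"]
        if status["State"] in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)

    if status["State"] != "SUCCEEDED":
        raise RuntimeError(f"Query {query_id} ended in state {status['State']}")

    # For SELECT queries the first row holds the column names.
    rows = athena.get_query_results(QueryExecutionId=query_id)["ResultSet"]["Rows"]
    return [[cell.get("VarCharValue") for cell in row["Data"]] for row in rows]


# Usage (needs AWS credentials):
#   import boto3
#   run_query(boto3.client("athena"), "SELECT * FROM lets_try_iceberg_firehose LIMIT 10")
```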

Cleanup

Head to the Amazon Data Firehose console and Delete the delivery stream that was created by the script.

Then you can use the 5-cleanup-iceberg-table.sql file to clean up the Iceberg table in Athena.

-- 5-cleanup-iceberg-table.sql

DROP TABLE IF EXISTS lets_try_iceberg_firehose;

Then, navigate to the AWS S3 console and Empty then Delete the bucket that was created by the script.

Finally, navigate to the AWS IAM Roles console and delete the role (lets-try-iceberg-firehose-role) and policy (lets-try-iceberg-firehose-policy) that were created by the script.
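If you prefer to script the cleanup, the steps outside Athena could be sketched like this. It assumes the policy is a customer managed policy attached to the role and that the bucket is not versioned; the `cleanup` function and its parameters are hypothetical, and the table itself is still dropped with the SQL above.

```python
def cleanup(firehose, s3_bucket, iam, stream_name, role_name, policy_arn):
    """Delete the delivery stream, the data bucket, and the IAM role and policy.

    firehose / iam are boto3 clients; s3_bucket is a boto3 Bucket resource.
    """
    firehose.delete_delivery_stream(DeliveryStreamName=stream_name)

    # A bucket must be empty before it can be deleted.
    s3_bucket.objects.all().delete()
    s3_bucket.delete()

    # The policy must be detached before the role or the policy can be removed.
    iam.detach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    iam.delete_role(RoleName=role_name)

    # Managed policies cannot be deleted while non-default versions exist.
    versions = iam.list_policy_versions(PolicyArn=policy_arn)["Versions"]
    for version in versions:
        if not version["IsDefaultVersion"]:
            iam.delete_policy_version(
                PolicyArn=policy_arn, VersionId=version["VersionId"]
            )
    iam.delete_policy(PolicyArn=policy_arn)


# Usage (needs AWS credentials):
#   import boto3
#   cleanup(
#       boto3.client("firehose"),
#       boto3.resource("s3").Bucket("iceberg-sample-data-<number>"),
#       boto3.client("iam"),
#       "lets-try-iceberg-stream",
#       "lets-try-iceberg-firehose-role",
#       "arn:aws:iam::<account-id>:policy/lets-try-iceberg-firehose-policy",
#   )
```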

Summary

I'm excited for this feature to be generally available, as it has the potential to make working with Iceberg tables in AWS much easier. For now, I would not recommend using this in production as it is still in preview.

If you have any questions, comments or feedback, please get in touch with me on Twitter @nathangloverAUS, LinkedIn or leave a comment below!