Dune Coloring Book using CGAN & TensorFlow

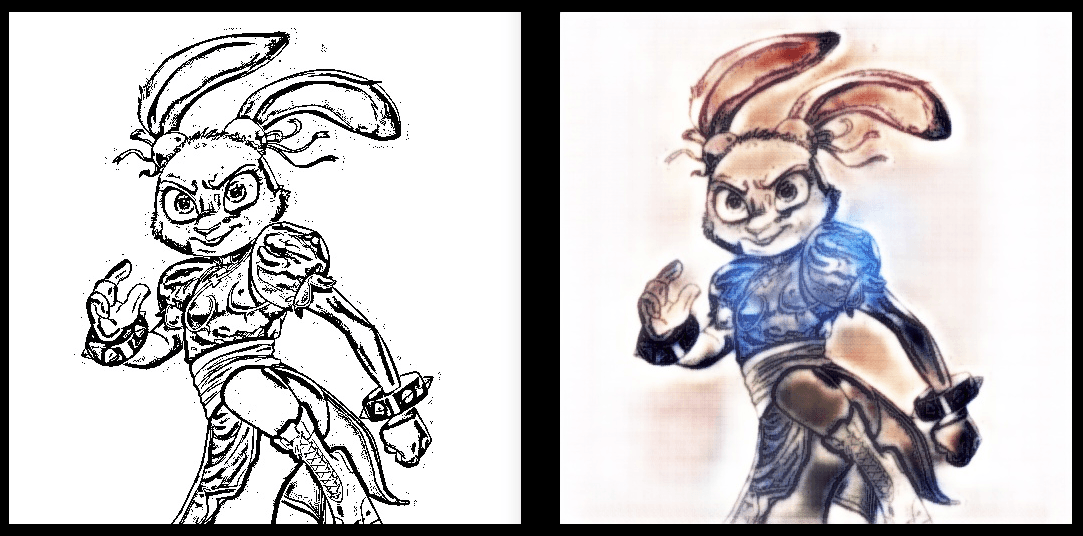

I'll preface this post by letting you know that the results, while interesting, are not crash hot. It was, however, a really interesting look into how deep learning can be applied to intriguing problems like coloring images.

Nathan Glover

Introduction

This week was a good one, as I finally took the leap and purchased an RTX 2080 Ti on the understanding that I would begin to teach myself deep learning concepts and data science. After spending a couple of hours looking at a few ad-hoc tutorials I began to lose interest, as it was difficult for me to learn some of the more mathematical concepts without anything practical to apply them to.

A Dune coloring book exists and it is glorious. It also sparked a really dangerous thought in my mind... could I possibly use machine learning to color the book?

Outcome

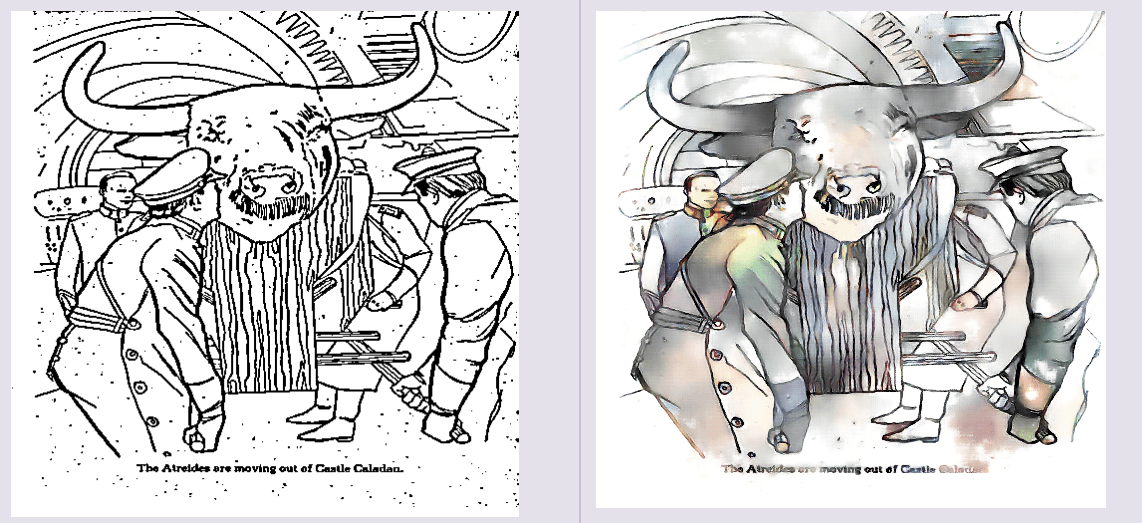

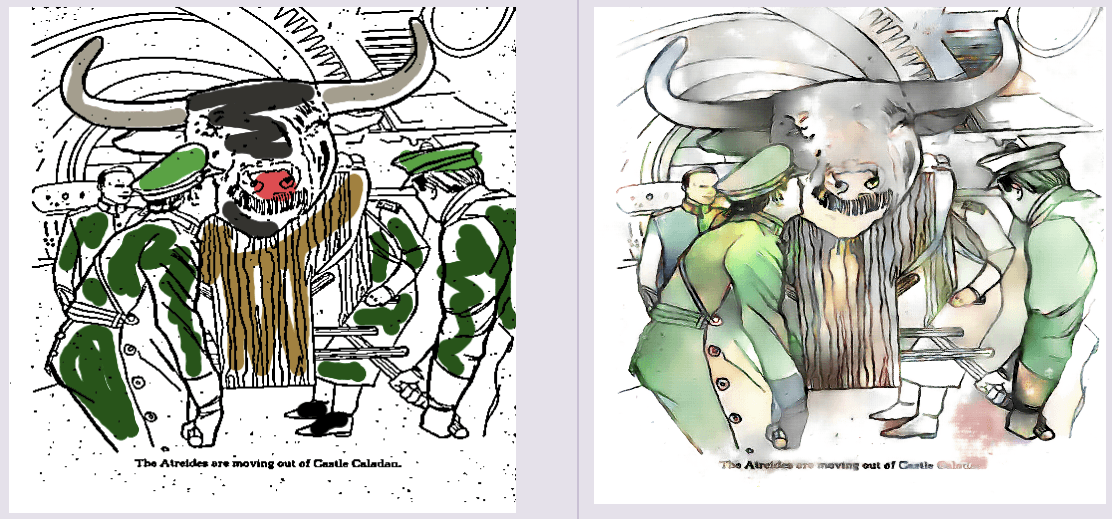

The goal of this post is to describe the process taken to colorise the drawings within the Dune coloring book. There will be two ways to colorise: unassisted and assisted, the latter meaning we're able to give the network hints.

Project Setup

All the workings outlined in this post can be found in the t04glovern/deep-dune-coloring repository. I recommend pulling this down before starting to work through this post. Use the following command to pull it down:

git clone https://github.com/t04glovern/deep-dune-coloring.git

From within the repository, run the following command to pull down a copy of the Dune book. This requires the AWS CLI to be set up; while it isn't strictly required for this tutorial, it is useful to have if you plan on downloading my pre-trained model later on.

aws s3 cp s3://devopstar/resources/deep-dune-coloring/dune-coloring-book-remaster.pdf dune-coloring-book-remaster.pdf

Book Extracting

The first step that needs to be completed is the dismantling of the PDF coloring book into individual pages. Although this does seem like a mildly pointless task that could be done manually, when taking into account that there are 52 pages it becomes more apparent that a programmatic method would be helpful.
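Each rendered image is cut into two equal halves (the conversion script crops at 50% of the width), which is how 26 rendered images turn into 52 pages. A minimal sketch of that arithmetic and of the resulting file names; `split_spread` and `page_names` are hypothetical helpers for illustration and are not part of the repository:

```python
def split_spread(width, height):
    """Return (left, upper, right, lower) boxes for the two halves of an image."""
    half = width // 2
    left_page = (0, 0, half, height)
    right_page = (half, 0, width, height)
    return [left_page, right_page]


def page_names(image_name):
    """Map e.g. 'image-3.png' to the two page files written into pages/."""
    stem = image_name[: -len(".png")]
    return ["pages/{}.{}.png".format(stem, i) for i in range(2)]


if __name__ == "__main__":
    print(split_spread(1000, 600))
    print(page_names("image-3.png"))
```

The boxes use the (left, upper, right, lower) convention common to imaging libraries, so they could be handed to a crop call if you wanted to do the split in Python rather than with ImageMagick.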

I've written a simple conversion script, convert.sh, that can be run to extract and dump the PDF pages out using ImageMagick.

#!/bin/sh

## Convert PDF to images

mkdir -p dune

convert -verbose -colorspace RGB -resize 1000 -interlace none -density 300 -quality 80 "dune-coloring-book-remaster.pdf[0-25]" dune/image.png

## Split images into pages

cd dune

mkdir -p pages

for file in *.png; do

  convert "$file" -crop 50%x100% +repage "pages/${file%.png}.%01d.png"

done

If you don't have access to ImageMagick, or would prefer to skip this step, you can also download the processed images using the following command.

aws s3 sync s3://devopstar/resources/deep-dune-coloring/dune dune/

Deepcolor

Deepcolor is really the heart of this repository. Built by Kevin Frans, it performs coloring and shading of manga-style lineart using TensorFlow and a CGAN. I've included a version of his code-base within the deepcolor folder of this repository.

NOTE: I was initially tossing up on adding it as a sub-module, however I found a number of small bugs in the code, which is now over 2 years old and was built for an old version of TensorFlow.

Setup Folders

There are a couple of folders that need to exist before we begin training a new model. To create them, run the following commands.

cd deepcolor

mkdir results

mkdir imgs

mkdir samples

Python Environment

Setting up the Python environment for this project can be done using two methods. The first is much easier and relies on you having Conda set up. Run the commands suitable for your configuration:

## GPU

conda create -n tensorflow_gpuenv_py27 tensorflow-gpu python=2.7 numpy

conda activate tensorflow_gpuenv_py27

pip install opencv-python untangle bottle

## CPU

conda create -n tensorflow_env_py27 tensorflow python=2.7 numpy

conda activate tensorflow_env_py27

pip install opencv-python untangle bottle

If you haven't got Conda, you will need to use your local Python installation. Below are the commands that should get you up and running. I'm aware it isn't ideal, so I would definitely recommend using Conda until I've ported the code base to Python 3.

# Using Python2.7

pip install --upgrade pip

pip install tensorflow numpy opencv-python untangle bottle

Training
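Before starting a long download or training run, it can be worth confirming that the packages installed above import cleanly in the active environment. A minimal sketch that is not part of the repository; `REQUIRED_MODULES` and `missing_modules` are hypothetical names, and `cv2` is the import name that the `opencv-python` package provides:

```python
import importlib

# Import names for the packages installed above (opencv-python imports as cv2).
REQUIRED_MODULES = ["tensorflow", "numpy", "cv2", "untangle", "bottle"]


def missing_modules(names):
    """Return the module names from `names` that fail to import."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules: " + ", ".join(missing))
    else:
        print("All required modules import cleanly.")
```

It sticks to `importlib.import_module` so that it should behave the same under the Python 2.7 environment used here and under Python 3.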

To train our model we first need to get access to a large set of training data. For this we're just going to follow the same method that deepcolor used and pull down a large number (more than 10,000) of images from Safebooru.

There is a script within the deepcolor folder called download_images.py that will do this for you. However, it will take a pretty long time, so I would instead recommend using the training images from my S3 bucket. Run one of the following depending on what you decide to do.

# From within deepcolor/

#

# Pull from Safebooru directly

python download_images.py

# Pull from S3

aws s3 sync s3://devopstar/resources/deep-dune-coloring/imgs imgs/

When you have the images downloaded, begin training by running the following from within the deepcolor folder:

python main.py train

While training is running, you can check progress by viewing the images in the results folder; a new example of the output is written every 200 iterations.
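To keep an eye on progress without browsing the folder by hand, a small helper could report the most recently written file. This is a sketch under the assumption that training outputs land directly in `results/`; `newest_file` is a hypothetical helper and not part of the repository:

```python
import glob
import os


def newest_file(folder):
    """Return the most recently modified file in `folder`, or None if it has none."""
    paths = [p for p in glob.glob(os.path.join(folder, "*")) if os.path.isfile(p)]
    if not paths:
        return None
    return max(paths, key=os.path.getmtime)


if __name__ == "__main__":
    # Run from within the deepcolor folder while training is in progress.
    print(newest_file("results"))
```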

Pre-trained Model

If you would like to use the pre-trained model, you can pull down a copy from my S3 bucket. (If this gets hammered I will remove access to it; contact me @nathangloverAUS on Twitter if you would like access.)

aws s3 sync s3://devopstar/resources/deep-dune-coloring/checkpoint checkpoint/

This should give you a folder structure like the following within the deepcolor folder:

checkpoint/
    tr/
        checkpoint
        model-10900500.index
        model-10900500.data-00000-of-00001
        model-10900500.meta

Sampling
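Sampling needs a complete checkpoint, whether trained locally or downloaded. A minimal sketch that checks for the three kinds of file shown in the listing above (`.index`, `.data-*` and `.meta`); `complete_checkpoints` is a hypothetical helper, not part of the repository, and the `checkpoint/tr` path is taken from that listing:

```python
import glob
import os


def complete_checkpoints(folder):
    """Return checkpoint prefixes in `folder` that have .index, .meta and data files."""
    prefixes = []
    for index_path in sorted(glob.glob(os.path.join(folder, "*.index"))):
        prefix = index_path[: -len(".index")]
        has_meta = os.path.isfile(prefix + ".meta")
        has_data = bool(glob.glob(prefix + ".data-*"))
        if has_meta and has_data:
            prefixes.append(os.path.basename(prefix))
    return prefixes


if __name__ == "__main__":
    # Run from within the deepcolor folder.
    print(complete_checkpoints(os.path.join("checkpoint", "tr")))
```

An empty list suggests the sync or the training run did not finish writing a usable checkpoint.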

When you have finished training, you can run a sampling job over the current model on the test set using the following command:

python main.py sample

It will spit out 100 sets of sample images into the samples folder within deepcolor. Each of these samples will have a line, color, final and original version.

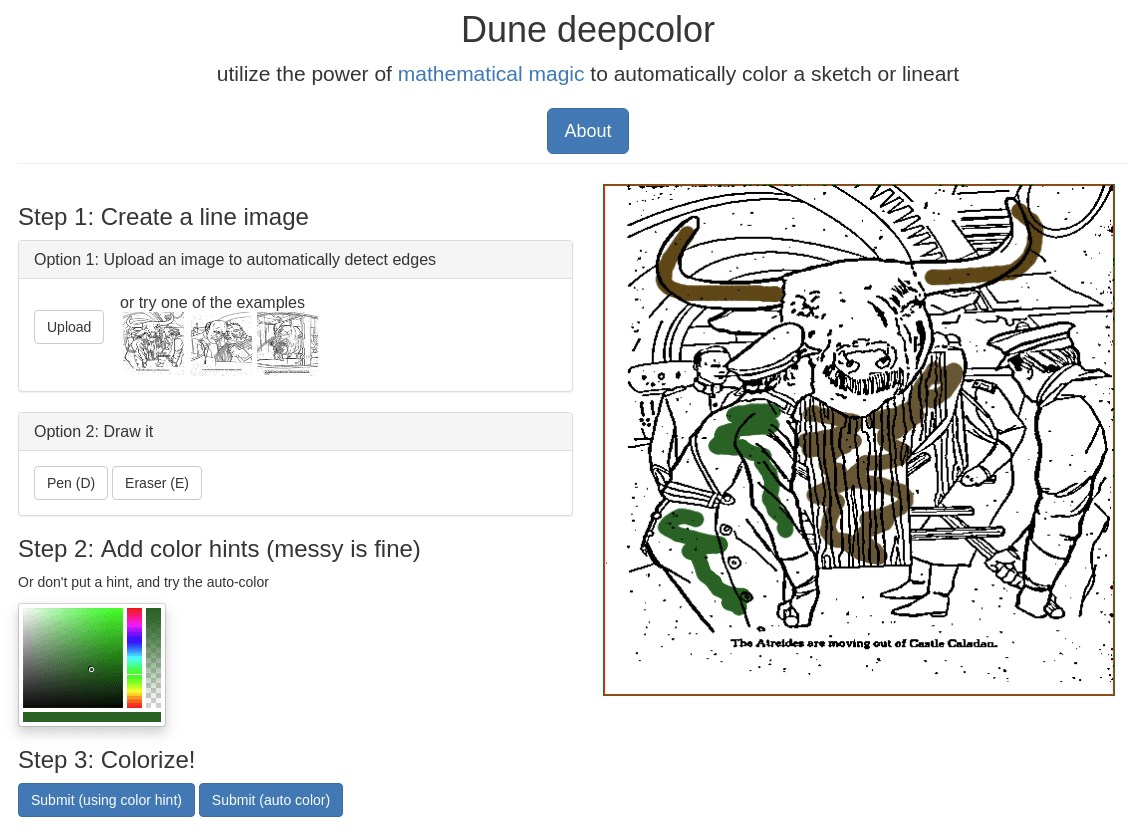

Web Interface

Once you have either run the training task (and have a checkpoint folder) or downloaded the pre-trained model using the command above, you should be able to run the web interface that can be used to interact with the model:

python server.py

Once the server starts up it is accessible on http://localhost:8000. Alternatively, navigate directly to http://localhost:8000/draw and you'll be able to interactively assist the model in generating an output.
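If the page doesn't load, a quick check from a second terminal can tell you whether anything is answering on that port. A minimal sketch using only the Python 3 standard library, so it should be run with a Python 3 interpreter rather than the project's 2.7 environment; `is_up` is a hypothetical helper and not part of the repository:

```python
import urllib.error
import urllib.request


def is_up(url, timeout=2.0):
    """Return True if `url` answers with a successful or redirect status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeouts and HTTP error statuses all end up here.
        return False


if __name__ == "__main__":
    print(is_up("http://localhost:8000"))
```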