Route53 External DNS management from GKE

The idea of a hybrid cloud seems to be growing in popularity, or at least it is very appealing to people. In theory it's a fantastic path to head down. However, the further you stray from the one-cloud-fits-all trail, the more headaches you face.

In this short post I'll go through what's required to let Kubernetes on Google Cloud (GKE) update Route53 records on AWS.

User Account for IAM

To update zones in AWS we'll need an IAM user for External DNS to act as. The following CloudFormation template creates that user with narrowly scoped permissions: it can change record sets in hosted zones and list zones and their records, and nothing more. The stack's outputs give us an access key and secret key to use later within Kubernetes.

```yaml
AWSTemplateFormatVersion: '2010-09-09'

Parameters:
  Password:
    NoEcho: 'true'
    Type: String
    Description: New account password
    MinLength: '1'
    MaxLength: '41'
    ConstraintDescription: the password must be between 1 and 41 characters

Resources:
  # IAM user that External DNS will act as
  Route53User:
    Type: AWS::IAM::User
    Properties:
      LoginProfile:
        Password: !Ref 'Password'

  Route53AdminGroup:
    Type: AWS::IAM::Group

  Admins:
    Type: AWS::IAM::UserToGroupAddition
    Properties:
      GroupName: !Ref 'Route53AdminGroup'
      Users: [!Ref 'Route53User']

  # Allow record changes in any hosted zone
  Route53ChangePolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: Route53Change
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action: 'route53:ChangeResourceRecordSets'
            Resource: 'arn:aws:route53:::hostedzone/*'
      Groups: [!Ref 'Route53AdminGroup']

  # Allow listing hosted zones and their records
  Route53ListPolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: Route53List
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action: ['route53:ListHostedZones', 'route53:ListResourceRecordSets']
            Resource: '*'
      Groups: [!Ref 'Route53AdminGroup']

  # Access key pair for the user, exposed through the stack outputs
  Route53Keys:
    Type: AWS::IAM::AccessKey
    Properties:
      UserName: !Ref 'Route53User'

Outputs:
  AccessKey:
    Value: !Ref 'Route53Keys'
    Description: AWSAccessKeyId of new user
  SecretKey:
    Value: !GetAtt [Route53Keys, SecretAccessKey]
    Description: AWSSecretAccessKey of new user
```

To deploy the template, save it as route53.yaml and run the following command from your terminal (this assumes you have the AWS CLI set up):

```shell
aws cloudformation create-stack \
  --stack-name iam-route53-user \
  --template-body file://route53.yaml \
  --parameters "ParameterKey=Password,ParameterValue=$(openssl rand -base64 30)" \
  --capabilities CAPABILITY_IAM
```

Note: you can replace the `$(openssl rand -base64 30)` piece with your own password if desired, however we won't ever need to log in with this user account directly anyway.
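Why the generated password is safe: `openssl rand -base64 30` produces 30 random bytes, and base64 encodes every 3 bytes as 4 characters, so the result is 30 / 3 × 4 = 40 characters, just inside the template's `MaxLength` of 41. If you substitute your own password, a small check like the hypothetical `check_password_length` helper below catches a value CloudFormation would reject before you create the stack. It is a sketch that counts bytes, which matches the character count for ASCII passwords.

```shell
#!/bin/sh
# check_password_length PASSWORD
# Hypothetical helper mirroring the template's MinLength/MaxLength (1-41).
check_password_length() {
  # printf avoids adding a newline; tr strips the padding some wc versions print
  len=$(printf '%s' "$1" | wc -c | tr -d ' ')
  if [ "$len" -ge 1 ] && [ "$len" -le 41 ]; then
    echo "ok: $len characters"
  else
    echo "password is $len characters; the template accepts 1-41" >&2
    return 1
  fi
}
```

For example, `check_password_length "$(openssl rand -base64 30)"` prints `ok: 40 characters`.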

Retrieve the access credentials for this IAM user by creating a shell script with the following contents, then running it.

```shell
#!/bin/sh

# Wait for the stack to finish creating
aws cloudformation wait stack-create-complete --stack-name iam-route53-user

# Store the AccessKey output in a variable
ACCESS_KEY=$(aws cloudformation describe-stacks --stack-name iam-route53-user \
  --query 'Stacks[0].Outputs[?OutputKey==`AccessKey`].OutputValue' \
  --output text)

# Store the SecretKey output in a variable
SECRET_KEY=$(aws cloudformation describe-stacks --stack-name iam-route53-user \
  --query 'Stacks[0].Outputs[?OutputKey==`SecretKey`].OutputValue' \
  --output text)

# Write the Helm values file external-dns.yaml containing the credentials
tee <<EOF >./external-dns.yaml
provider: aws
aws:
  secretKey: '$SECRET_KEY'
  accessKey: '$ACCESS_KEY'
rbac:
  create: true
EOF
```

The result should be a file called external-dns.yaml with a secretKey and an accessKey defined.

Note: Be sure NOT to share these credentials with anyone. Anyone holding them can change the records in your hosted zones.
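The script above writes external-dns.yaml even if a `describe-stacks` query comes back empty (for example because the stack failed to create), and External DNS would then only fail later with authentication errors. As a defensive check, the hypothetical `check_values` helper below confirms that both keys in the generated file are non-empty and not the literal `None`. It is a sketch that assumes the exact `key: 'value'` layout the script writes.

```shell
#!/bin/sh
# check_values FILE
# Hypothetical helper: fail if accessKey or secretKey is empty or 'None'.
check_values() {
  for key in accessKey secretKey; do
    # Extract the single-quoted value written by the script above
    value=$(sed -n "s/^ *${key}: '\(.*\)'\$/\1/p" "$1")
    if [ -z "$value" ] || [ "$value" = "None" ]; then
      echo "$key is missing from $1" >&2
      return 1
    fi
  done
  echo "$1 has both keys set"
}
```

Run it as `check_values ./external-dns.yaml` before copying the file anywhere.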

Kubernetes (GKE) External DNS

Now that the IAM user's credentials are in external-dns.yaml, copy the file to a machine that can manage your Kubernetes cluster (with kubectl and helm configured) and run the following commands to deploy External DNS using the stable/external-dns Helm chart.

```shell
# Create the Tiller service account & cluster role binding
wget https://raw.githubusercontent.com/t04glovern/gke-dm-bootstrap/master/k8s/rbac-config.yaml -O rbac-config.yaml
kubectl create -f rbac-config.yaml

# Initialise Helm with that service account
helm init --service-account tiller --history-max 200

# Install External DNS
helm install \
  --name external-dns stable/external-dns \
  -f external-dns.yaml
```

Note: I've included the Helm initialisation steps; they might not be required if you have already set up Helm and Tiller. These commands use Helm 2: Helm 3 removes Tiller and `helm init`, and takes the release name as a positional argument instead of `--name`.

And that's it! You should now have External DNS configured and ready to use. In the next step we'll deploy an Nginx Ingress Controller and have External DNS publish its address to our hosted zone.
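Before moving on, you can confirm that External DNS actually started. The sketch below wraps two standard kubectl commands in a hypothetical `verify_external_dns` helper. It assumes the chart named the Deployment `external-dns`, which is what I'd expect for a release called `external-dns`; check `kubectl get deployments` if yours differs. If the AWS keys are wrong, these logs are the first place to look.

```shell
#!/bin/sh
# verify_external_dns [DEPLOYMENT]
# Hypothetical helper; the default Deployment name "external-dns" is an assumption.
verify_external_dns() {
  deploy=${1:-external-dns}
  # Block until the Deployment's pods are available, or give up after 2 minutes
  kubectl rollout status "deployment/$deploy" --timeout=120s || return 1
  # Show recent log lines so credential or permission errors are visible early
  kubectl logs "deployment/$deploy" --tail=20
}
```

Run it as `verify_external_dns`, or pass a different Deployment name as the first argument.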

Nginx Ingress Controller

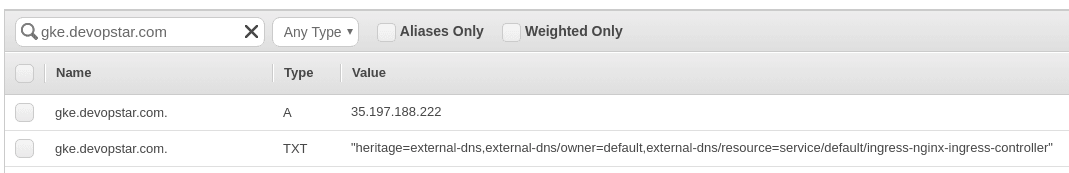

Our ingress controller will be the stable/nginx-ingress Helm chart. For External DNS to create a record for it, the chart needs a values file that annotates the controller's Service with the desired hostname, as below. Be sure to replace gke.devopstar.com with a record name inside your own hosted zone.

```yaml
controller:
  service:
    annotations:
      # External DNS creates a record for this name pointing at the Service's IP
      external-dns.alpha.kubernetes.io/hostname: gke.devopstar.com
```

Save the above in a file such as values.yaml, then run the following to deploy the controller.

```shell
helm install \
  --name ingress stable/nginx-ingress \
  -f values.yaml
```

After the deployment succeeds and GKE has assigned the controller a public IP, checking Route53 should show a record pointing at the ingress controller's public IP.
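To check the record from the cluster side as well, the hypothetical `check_ingress_dns` helper below compares the external IP on the controller's LoadBalancer Service with what the hostname currently resolves to. It assumes the chart named the Service `ingress-nginx-ingress-controller` (release `ingress` plus chart `nginx-ingress`; confirm with `kubectl get svc`) and that `dig` is installed. A mismatch right after deploying may just mean External DNS hasn't synced yet or an older answer is still cached, so give it a few minutes before digging deeper.

```shell
#!/bin/sh
# check_ingress_dns HOSTNAME [SERVICE]
# Hypothetical helper; the default Service name is an assumption about the chart.
check_ingress_dns() {
  host=$1
  svc=${2:-ingress-nginx-ingress-controller}
  # External IP that GKE assigned to the controller's LoadBalancer Service
  lb_ip=$(kubectl get svc "$svc" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  # First A record currently returned for the hostname
  dns_ip=$(dig +short "$host" A | head -n 1)
  if [ -n "$lb_ip" ] && [ "$lb_ip" = "$dns_ip" ]; then
    echo "$host resolves to $lb_ip"
  else
    echo "$host resolves to '${dns_ip:-nothing}', Service IP is '${lb_ip:-pending}'" >&2
    return 1
  fi
}
```

For the hostname used in this post, run `check_ingress_dns gke.devopstar.com`.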

Clean Up

Cleaning up this deployment is as simple as removing the IAM user from AWS, which you can do by deleting the CloudFormation stack:

```shell
aws cloudformation delete-stack --stack-name iam-route53-user
```
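`delete-stack` returns as soon as the deletion starts, so a script that needs the IAM user to be gone should also wait for it. The hypothetical `cleanup_route53_user` helper below deletes the stack, waits with `aws cloudformation wait stack-delete-complete`, and then removes the local external-dns.yaml, whose keys stop working once the user and its access key are deleted. It is a sketch that assumes you run it from the directory holding that file, and it does not touch the Helm releases in the cluster.

```shell
#!/bin/sh
# cleanup_route53_user [STACK]
# Hypothetical helper; defaults to the stack name used in this post.
cleanup_route53_user() {
  stack=${1:-iam-route53-user}
  aws cloudformation delete-stack --stack-name "$stack" || return 1
  # delete-stack is asynchronous; block until the user and keys are gone
  aws cloudformation wait stack-delete-complete --stack-name "$stack" || return 1
  # The credentials in this file are now useless, so don't leave them lying around
  rm -f ./external-dns.yaml
}
```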