Serverless (kinda) Containers with Google Cloud Run

Cloud Run is Google's new solution for running serverless containers that expose HTTP endpoints. It was recently announced at Google Cloud Next '19, and it looks like it might be a reasonable solution to a long-standing problem with containers that I've been running into more often recently.

The Pitch

Let's consider a use case where I'd like to serve some kind of stateless web service, but:

- I don't want to manage a container cluster, and

- I don't want to deal with the complexity of breaking my site up into serverless functions and statically hosted content.

In previous posts I've used Fargate as my go-to container hosting provider, mostly due to its flexibility and low management overhead. The issue has always been that the services I deploy onto Fargate need to be running all the time, waiting for requests. Even with good scaling policies, the cost of running even a small cluster quickly becomes difficult to justify.

In comes Cloud Run

Cloud Run fills a gap around deploying small stateless services in a much more serverless way. All you have to do is upload and submit a container that adheres to the runtime contract, and you're good! The key requirements are:

- Service must be stateless

- The container has to start an HTTP server within 4 minutes of a request being made

- The container must listen on port 8080

- Maximum memory available is 2 GiB

- 1 CPU is allocated
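To make the contract a little more concrete, here is a minimal sketch of two pre-flight checks you could run before deploying. The helper names (`to_mib`, `within_memory_limit`, `listen_port`) are hypothetical, the 2048 MiB ceiling mirrors the 2 GiB limit listed above, and the port fallback assumes Cloud Run hands the port to the container through the `PORT` environment variable (8080 at the time of writing).

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for the Cloud Run contract limits listed above.
# Assumption: memory values use the Mi/Gi suffixes that `--memory` accepts (e.g. 512Mi, 2Gi).

MAX_MEMORY_MIB=2048   # 2 GiB ceiling on the managed service at the time of writing

# Convert a memory string such as "512Mi" or "2Gi" into MiB; fail on anything else.
to_mib() {
  local value="$1" number unit
  number="${value%[GM]i}"        # strip a trailing Gi/Mi suffix, if present
  unit="${value#"$number"}"      # whatever suffix was stripped
  if ! [[ "$number" =~ ^[0-9]+$ ]]; then
    echo "unsupported memory value: $value" >&2
    return 1
  fi
  case "$unit" in
    Gi) echo $(( 10#$number * 1024 )) ;;
    Mi) echo $(( 10#$number )) ;;
    *)  echo "unsupported memory value: $value" >&2; return 1 ;;
  esac
}

# Succeed only if the requested memory fits within the 2 GiB contract.
within_memory_limit() {
  local mib
  mib=$(to_mib "$1") || return 1
  [ "$mib" -le "$MAX_MEMORY_MIB" ]
}

# Port the HTTP server should bind to: PORT if set, otherwise the contract's 8080.
listen_port() {
  echo "${PORT:-8080}"
}
```

For example, `within_memory_limit 2Gi` succeeds while `within_memory_limit 4Gi` fails, which is a cheap way to catch a bad `--memory` value before waiting on a deploy.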

Cloud Run is built on Knative and lets you run the same workflow either on the managed service provided by Google or on your own Kubernetes cluster... on Google (GKE).

Let's Try it

I certainly have my doubts, but I thought I'd at least give the service a shot to see if it can handle some weird workflows. Specifically, I'm going to redeploy the OpenAI GPT-2 text generator container that we built in Exposing PyTorch models over a containerised API.

The reason is that I want to expose the GPT-2 functionality to the world without racking up the mammoth costs of running a behemoth Fargate cluster.

We're going to try both variants of Cloud Run: the managed service and Cloud Run on GKE in GCP.

Cloud Run Setup

Throughout this tutorial we'll be using the code found in https://github.com/t04glovern/gpt2-k8s-cloud-run. You can use the following command to pull the repo down:

```bash
git clone https://github.com/t04glovern/gpt2-k8s-cloud-run.git
```

I'm not going to go over the specifics of what all the code in the repository is for; if you want a much better understanding, I recommend reading the previous post. You will, however, need to pull down the model using the following:

```bash
mkdir models
curl --output models/gpt2-pytorch_model.bin https://s3.amazonaws.com/models.huggingface.co/bert/gpt2-pytorch_model.bin
```

gcloud CLI

You'll also need the gcloud CLI and a GCP project to work with. I hate to sound like a broken record, but I recommend checking out my post on Containerizing & Deploying to Kubernetes on GCP for the specifics of setting all this up.

Docker

Finally, you need to make sure Docker is installed on your system, along with docker-compose. We need Docker to build and push our container to GCR (Google Container Registry).

Open up the container_push.sh script and change PROJECT_ID to the project you set up in GCP.

```bash
#!/usr/bin/env bash
PROJECT_ID=devopstar

# Set gcloud project
gcloud config set project $PROJECT_ID

# Authenticate Docker
gcloud auth configure-docker

# Build Container
docker build -t gpt-2-flask-api .

# Tag Image for GCR
docker tag gpt-2-flask-api:latest \
  asia.gcr.io/$PROJECT_ID/gpt-2-flask-api:latest

# Push to GCR
docker push asia.gcr.io/$PROJECT_ID/gpt-2-flask-api:latest
```

The script should look something like the above. When you're ready, run it to build, tag and push the container to GCR.
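If you'd rather not edit the script for every project, one option is to take the project ID as an argument instead. This is a sketch of that variation, not the repository's script: the function names `image_ref` and `build_and_push` are hypothetical, while the gcloud and docker commands are the same ones used in container_push.sh.

```bash
#!/usr/bin/env bash
# Hypothetical variation of container_push.sh that takes the project ID as an argument.

IMAGE_NAME="gpt-2-flask-api"
REGISTRY="asia.gcr.io"   # same regional GCR host used in the original script

# Print the fully qualified GCR reference, e.g. asia.gcr.io/<project>/gpt-2-flask-api:latest
image_ref() {
  local project_id="$1" tag="${2:-latest}"
  echo "${REGISTRY}/${project_id}/${IMAGE_NAME}:${tag}"
}

# Build, tag and push the image for the given project (same steps as container_push.sh).
build_and_push() {
  local project_id="$1"
  if [ -z "$project_id" ]; then
    echo "usage: build_and_push <project-id>" >&2
    return 1
  fi
  gcloud config set project "$project_id" &&
    gcloud auth configure-docker &&
    docker build -t "${IMAGE_NAME}" . &&
    docker tag "${IMAGE_NAME}:latest" "$(image_ref "$project_id")" &&
    docker push "$(image_ref "$project_id")"
}
```

Usage would be something like `build_and_push devopstar` from the repository root. Chaining the steps with `&&` stops at the first failure, so a failed build never gets tagged or pushed.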

Cloud Run GKE

To start with, we're going to use Cloud Run on a Google Kubernetes Engine (GKE) cluster. This is purely because I've already run through this tutorial and know that, for this specific edge case, it only ends in heartbreak if we don't use GKE.

NOTE: Cloud Run is perfectly fine for normal workloads. What I try to do with it in this post is a little unrealistic.

Following the guide at https://cloud.google.com/run/docs/quickstarts/prebuilt-deploy-gke, we can spin up a new cluster by first changing PROJECT_ID in gke.sh and then running the script:

```bash
#!/usr/bin/env bash
PROJECT_ID=devopstar
REGION=australia-southeast1

gcloud beta container clusters create "$PROJECT_ID-gpt2-demo" \
  --project "$PROJECT_ID" \
  --zone "$REGION-a" \
  --no-enable-basic-auth \
  --cluster-version "1.12.6-gke.10" \
  --machine-type "n1-standard-4" \
  --image-type "COS" \
  --disk-type "pd-standard" \
  --disk-size "100" \
  --metadata disable-legacy-endpoints=true \
  --scopes "https://www.googleapis.com/auth/cloud-platform" \
  --num-nodes "3" \
  --enable-stackdriver-kubernetes \
  --enable-ip-alias \
  --network "projects/$PROJECT_ID/global/networks/default" \
  --subnetwork "projects/$PROJECT_ID/regions/$REGION/subnetworks/default" \
  --default-max-pods-per-node "110" \
  --addons HorizontalPodAutoscaling,HttpLoadBalancing,Istio,CloudRun \
  --istio-config auth=MTLS_PERMISSIVE \
  --enable-autoupgrade \
  --enable-autorepair
```

Wait a little while as this massive feat of engineering spins up into existence. If you'd like to see the status in more detail, check out the control plane.
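If you'd rather script the wait than watch the console, here is a minimal sketch that polls the cluster status with `gcloud container clusters describe`. The function name `wait_for_cluster` and its retry defaults are hypothetical; it assumes the cluster name and zone produced by gke.sh.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll until the GKE cluster created by gke.sh reports RUNNING.
# Arguments: cluster name, zone, max attempts (default 60), delay in seconds (default 10).

wait_for_cluster() {
  local cluster="$1" zone="$2" attempts="${3:-60}" delay="${4:-10}"
  local status i
  for (( i = 1; i <= attempts; i++ )); do
    status=$(gcloud container clusters describe "$cluster" \
      --zone "$zone" --format='value(status)' 2>/dev/null)
    echo "attempt $i: ${status:-UNKNOWN}" >&2
    if [ "$status" = "RUNNING" ]; then
      return 0
    fi
    # Only sleep if another attempt is coming.
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For the cluster above that would be `wait_for_cluster devopstar-gpt2-demo australia-southeast1-a`; the non-zero return after the last attempt makes it easy to chain into the deploy step with `&&`.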

Once the cluster is up, you can deploy a Cloud Run service with a single command:

```bash
gcloud beta run deploy \
  --image asia.gcr.io/devopstar/gpt-2-flask-api \
  --cluster devopstar-gpt2-demo \
  --cluster-location australia-southeast1-a

# Service name: (gpt-2-flask-api):
# Deploying container to Cloud Run on GKE service [gpt-2-flask-api] in namespace [default] of cluster [devopstar-gpt2-demo]
# ⠧ Deploying new service... Configuration "gpt-2-flask-api" is waiting for a Revision to become ready.
# ⠧ Creating Revision...
# . Routing traffic...
```

The first deployment will take a while, as the node(s) in the cluster have to pull down the massive container image. Once it's finished, however, we need to get the Istio ingress gateway IP, which can be done by running the following:

kubectl get svc istio-ingressgateway -n istio-system

export GATEWAY_IP=$(kubectl -n istio-system get service \

istio-ingressgateway \

-o jsonpath='{.status.loadBalancer.ingress[0].ip}')

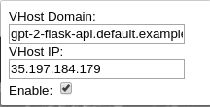

echo $GATEWAY_IPNow unfortunately we need to install a small chrome plugin to test the next bit as Istio doesn't expose a unique endpoint for our service and instead we need to route based on a Virtual host. Install the Virtual Host extension and set the configuration to the following

- VHost Domain: gpt-2-flask-api.default.example.com

- VHost IP: The gateway IP you receive from the command above

Visit http://GATEWAY-IP and you should be presented with the running service.
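If you'd rather not install a browser extension, the same virtual-host routing can be exercised from the command line by setting the Host header yourself. A minimal sketch, assuming GATEWAY_IP was exported as above and the service lives in the default namespace under the example.com domain; the function name `call_via_gateway` is hypothetical.

```bash
#!/usr/bin/env bash
# Command-line alternative to the Virtual Host browser extension.
# Assumes GATEWAY_IP was exported as above and the service is routed as
# gpt-2-flask-api.default.example.com by the Istio ingress gateway.

SERVICE_HOST="gpt-2-flask-api.default.example.com"

# Send a request to the gateway IP, letting Istio route on the Host header.
call_via_gateway() {
  local gateway_ip="${1:-$GATEWAY_IP}" path="${2:-/}"
  if [ -z "$gateway_ip" ]; then
    echo "GATEWAY_IP is not set" >&2
    return 1
  fi
  curl -sS -H "Host: ${SERVICE_HOST}" "http://${gateway_ip}${path}"
}
```

Running `call_via_gateway "$GATEWAY_IP"` should return the same page the browser shows once the extension is configured.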

Delete Cluster

Once you're happy with the GKE implementation, clean up the cluster and the Cloud Run service running on it so you aren't charged any more money!

```bash
gcloud container clusters delete devopstar-gpt2-demo --zone australia-southeast1-a
```

Obviously, replace devopstar with your own project ID.

Cloud Run GCP

Now that we know everything works on GKE, let's deploy the same application to Cloud Run as a managed service. To start with, we have to make sure the Cloud Run API is enabled. You can do this from the following URL: https://console.developers.google.com/apis/api/run.googleapis.com
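If you'd rather stay in the terminal, the same API can be enabled with `gcloud services enable`. A minimal sketch, wrapped in a hypothetical helper that takes the project ID as an argument:

```bash
#!/usr/bin/env bash
# Hypothetical helper: enable the Cloud Run API for a project from the CLI
# instead of through the console URL.

enable_cloud_run_api() {
  local project_id="$1"
  if [ -z "$project_id" ]; then
    echo "usage: enable_cloud_run_api <project-id>" >&2
    return 1
  fi
  gcloud services enable run.googleapis.com --project "$project_id"
}
```

For this post that would be `enable_cloud_run_api devopstar`.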

Here's the best bit: run the following two commands to deploy to Cloud Run:

```bash
# Set Cloud Run region
gcloud config set run/region us-central1

# Run
gcloud beta run deploy \
  --image asia.gcr.io/devopstar/gpt-2-flask-api \
  --memory 2Gi

# Service name: (gpt-2-flask-api):
# Deploying container to Cloud Run service [gpt-2-flask-api] in project [devopstar] region [us-central1]
# Allow unauthenticated invocations to new service [gpt-2-flask-api]?
# (y/N)? y
# ✓ Deploying new service... Done.
# ✓ Creating Revision...
# ✓ Routing traffic...
# Done.
# Service [gpt-2-flask-api] revision [gpt-2-flask-api-9eb49475-778f-4f11-8a5c-60d1ed3bd2ff] has been deployed and is serving traffic at https://gpt-2-flask-api-ulobqfivxa-uc.a.run.app
```

Navigate to the URL provided and you should have access to the application! Now you can kinda understand why Cloud Run is such an interesting solution to the problem.
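Rather than copying the URL out of the deploy output, you can look it up and smoke-test it from a script. This is a sketch, not part of the original workflow: `service_url` and `smoke_test` are hypothetical names, and it assumes run/region is already configured as above, the service allows unauthenticated invocations, and the describe output exposes the URL at `status.url`.

```bash
#!/usr/bin/env bash
# Hypothetical smoke test for the managed deployment above.
# Assumes run/region is configured and unauthenticated invocations are allowed.

SERVICE_NAME="gpt-2-flask-api"

# Look up the HTTPS URL Cloud Run assigned to the service.
service_url() {
  gcloud beta run services describe "$SERVICE_NAME" --format='value(status.url)'
}

# Request the landing page and report the HTTP status code; succeed only on 200.
smoke_test() {
  local url code
  url=$(service_url) || return 1
  if [ -z "$url" ]; then
    echo "no URL found for $SERVICE_NAME" >&2
    return 1
  fi
  code=$(curl -s -o /dev/null -w '%{http_code}' "$url/")
  echo "$url -> HTTP $code"
  [ "$code" = "200" ]
}
```

Only the landing page is checked here; a generation request is a different story, as the next section shows.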

Issues with Memory

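Unfortunately, due to memory limits, it doesn't look like we can use Cloud Run for this purpose at the moment. Generation crawls along at roughly 4 seconds per iteration, and the request is cut off with a 504 after 300 seconds: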

```text
# 2019-04-11T13:08:14.652058Z 16%|█▌ | 80/512 [04:23<29:04, 4.04s/it]
# 2019-04-11T13:08:18.862046Z 16%|█▌ | 81/512 [04:27<29:07, 4.06s/it]
# 2019-04-11T13:08:22.155164Z 16%|█▌ | 82/512 [04:31<29:24, 4.10s/it]
# 2019-04-11T13:08:26.152569Z 16%|█▌ | 83/512 [04:34<27:35, 3.86s/it]
# 2019-04-11T13:08:30.952013Z 16%|█▋ | 84/512 [04:38<27:49, 3.90s/it]
# 2019-04-11T13:08:34.552013Z 17%|█▋ | 85/512 [04:43<29:40, 4.17s/it]
# 2019-04-11T13:08:39.355836Z 17%|█▋ | 86/512 [04:47<28:23, 4.00s/it]
# 2019-04-11T13:08:43.051604Z 17%|█▋ | 87/512 [04:52<30:02, 4.24s/it]
# 2019-04-11T13:08:46.922621Z POST 504 234 B 300 s Chrome 73 /

upstream request timeout
```

However... it does appear that the request timeout can be raised using the following commands, up to a maximum of 15 minutes.

```bash
# On Update
gcloud beta run services update gpt-2-flask-api \
  --timeout=15m

# On Creation
gcloud beta run deploy \
  --image asia.gcr.io/devopstar/gpt-2-flask-api \
  --memory 2Gi \
  --timeout=15m
```

However, no dice unfortunately. It appears that the limited CPU and memory mean I can't run the container on the managed Cloud Run service. To be honest, I didn't have much hope it would work given the current resource limits.
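A quick back-of-envelope calculation shows why even the 15 minute ceiling isn't enough. The sketch below multiplies the iteration count by the seconds per iteration read off the earlier log (512 iterations at about 4 s/it) and compares the result with the timeout. Those figures are observations from one run rather than guaranteed numbers, and the helper names are hypothetical; `duration_to_seconds` only handles a single `m` or `s` unit.

```bash
#!/usr/bin/env bash
# Back-of-envelope check: can a generation run finish inside the request timeout?
# Inputs come from the tqdm log (iterations and seconds per iteration).

# Estimated total seconds for a run, rounded: iterations * seconds per iteration.
estimate_seconds() {
  awk -v n="$1" -v per="$2" 'BEGIN { printf "%d\n", n * per + 0.5 }'
}

# Convert a single-unit duration such as "15m", "300s" or "300" into seconds.
duration_to_seconds() {
  case "$1" in
    *m) echo $(( 10#${1%m} * 60 )) ;;
    *s) echo $(( 10#${1%s} )) ;;
    *)  echo $(( 10#$1 )) ;;
  esac
}

# Succeed if the estimated run fits inside the timeout; print the numbers to stderr.
fits_in_timeout() {
  local needed limit
  needed=$(estimate_seconds "$1" "$2")
  limit=$(duration_to_seconds "$3")
  echo "needs ~${needed}s, timeout is ${limit}s" >&2
  [ "$needed" -le "$limit" ]
}
```

With the numbers from the first run, `fits_in_timeout 512 4.04 15m` reports roughly 2068 seconds (about 34 minutes) against a 900 second limit, which lines up with what the second run shows: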

```text
# 15 minute request only gets halfway
# 2019-04-12T12:59:52.451876Z 55%|█████▌ | 283/512 [14:23<06:42, 1.76s/it]
# 2019-04-12T12:59:57.242055Z 55%|█████▌ | 284/512 [14:26<08:26, 2.22s/it]
# 2019-04-12T13:00:02.343637Z 56%|█████▌ | 285/512 [14:31<11:19, 2.99s/it]
# 2019-04-12T13:00:06.045967Z 56%|█████▌ | 286/512 [14:36<13:39, 3.63s/it]
# 2019-04-12T13:00:09.955038Z 56%|█████▌ | 287/512 [14:40<13:40, 3.65s/it]
# 2019-04-12T13:00:14.548409Z 56%|█████▋ | 288/512 [14:44<13:54, 3.73s/it]
```

Custom Domain

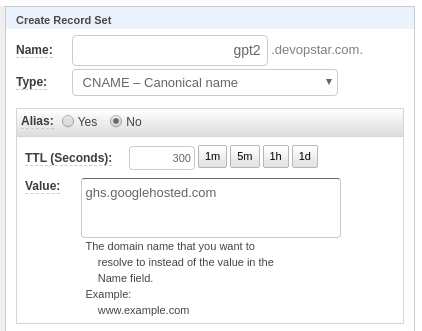

While I'm here, I thought it would be worth checking out the custom domain options for fronting Cloud Run services. If you already have a verified custom domain, you can attach a subdomain to the endpoint. Start by checking or verifying a domain you own (obviously, replace devopstar.com with your own domain):

```bash
gcloud domains verify devopstar.com
```

Next, map the service to a subdomain of your choosing:

```bash
gcloud beta run domain-mappings create \
  --service gpt-2-flask-api \
  --domain gpt2.devopstar.com

# Creating......done.
# Mapping successfully created. Waiting for certificate provisioning. You must configure your DNS records for certificate issuance to begin.
# RECORD TYPE CONTENTS
# CNAME ghs.googlehosted.com
```

In your DNS provider, add a CNAME record for your subdomain pointing at ghs.googlehosted.com.
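Certificate issuance only starts once the record resolves, so it can be handy to confirm the CNAME has propagated before waiting on the mapping. A minimal sketch using `dig` (from bind-utils/dnsutils); the function name `cname_ready` is hypothetical, and it expects the trailing-dot form `dig +short` prints.

```bash
#!/usr/bin/env bash
# Hypothetical check that a subdomain's CNAME points at Google's frontend
# before waiting on certificate provisioning for the domain mapping.

EXPECTED_TARGET="ghs.googlehosted.com."   # dig +short prints the trailing dot

cname_ready() {
  local domain="$1" target
  target=$(dig +short CNAME "$domain" | head -n 1)
  echo "${domain} -> ${target:-<no CNAME>}" >&2
  [ "$target" = "$EXPECTED_TARGET" ]
}
```

For example, `cname_ready gpt2.devopstar.com` succeeds once the record is visible from your resolver.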

And that's pretty much it! It's super easy, and you also get an HTTPS endpoint. In my case I was able to access it over https://gpt2.devopstar.com

Summary

To close out, I think it's worth saying that Cloud Run definitely solves a problem that a lot of traditionally long-running web services could really benefit from. The use case I tried to shoehorn in wasn't realistic, although I'm hoping it'll become more feasible in the future if resource limits increase.